What is Gradient Boosting

Gradient Boosting is a sequential technique where new models are added to correct the errors made by existing models. Models are added sequentially until no further improvements can be made. The key idea behind gradient boosting is to set the target outcomes for this next model to minimize the error. The target outcome of each case in the data set depends on how much changing that case’s prediction impacts the loss.

The term ‘gradient’ in gradient boosting comes from the algorithm’s use of gradient descent to minimize the loss. When a decision tree is added, instead of predicting the actual target variable, it tries to predict the gradient of the loss function, hence the name ‘gradient boosting’.

Components of Gradient Boosting

The main components of Gradient Boosting include a loss function to be optimized, a weak learner to make predictions, and an additive model to add weak learners to minimize the loss function.

The loss function used depends on the type of problem being solved. It must be differentiable, but it can be customized to the specific requirements of the problem. The weak learner is typically a decision tree, but it can also be any learner that accepts weights on the training set and can be fit on a subset of the data.

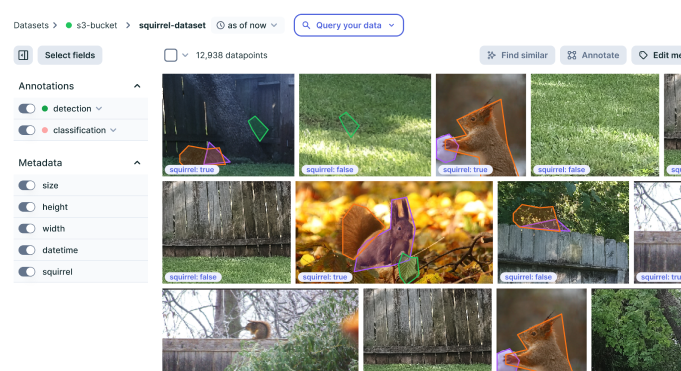

Improve your data

quality for better AI

Easily curate and annotate your vision, audio,

and document data with a single platform

Working of Gradient Boosting

Gradient Boosting involves three steps: initialization, iteration, and final prediction. In the initialization step, a base learner (often a shallow decision tree) is fit on the data. In the iteration step, a new weak learner is fit on the residuals of the base learner, and this process is repeated until a stopping criterion is met. In the final prediction step, the predictions of all learners are combined to produce the final prediction.

The iteration step is the most important step in Gradient Boosting. It involves fitting a new learner on the residuals of the base learner, which are the differences between the actual and predicted values. This step is repeated until a stopping criterion is met, such as the number of iterations reaching a maximum value, or the improvement in loss reaching a minimum value.

Advantages of Gradient Boosting

Gradient Boosting has several advantages that make it a popular choice for machine learning tasks, some of the most important ones are as follows.

Handling of Numerical and Categorical Data

Gradient Boosting can handle both numerical and categorical data. For numerical data, it can model complex non-linear relationships by using decision trees as weak learners. For categorical data, it can handle high cardinality categories without the need for one-hot encoding, which can lead to high dimensionality.

Moreover, Gradient Boosting can handle missing values in the data. It can do this by using surrogate splits in the decision trees, which are alternative splits that can be used when the primary split is not applicable due to missing values. Gradient Boosting is a robust method for dealing with real-world data that often contains missing values.

Modeling of Complex Non-linear Relationships

Gradient Boosting can model complex non-linear relationships using decision trees as weak learners. These non-linear relationships are modeled by dividing the feature space into regions and making predictions for each area. By combining multiple decision trees, Gradient Boosting can model complex non-linear relationships.

Moreover, by using gradient descent, Gradient Boosting can optimize any differentiable loss function. This allows it to model complex relationships that may not be captured by other methods. This makes Gradient Boosting a powerful tool for predictive modeling.

Disadvantages of Gradient Boosting

Despite its many advantages, Gradient Boosting also has some disadvantages. Some of the most common pitfalls of gradient boosting are as follows.

Tendency to Overfit

Gradient Boosting has a tendency to overfit the data, especially when the data is noisy or contains outliers. This is because it builds complex models by adding many decision trees. If the data contains noise or outliers, these decision trees can fit the noise or outliers, leading to overfitting.

To mitigate this issue, Gradient Boosting uses a technique called shrinkage, where the predictions of each tree are shrunk by a factor before they are added. This slows down the learning process, reducing the risk of overfitting. However, it also increases the computational cost and the number of trees needed.

Computational Cost and Parameter Tuning

Gradient Boosting has a high computational cost due to its sequential nature. It cannot be parallelized, meaning that it cannot take advantage of multiple processors to speed up the computation. This makes it slower compared to other methods that can be parallelized, such as Random Forests.

Gradient Boosting requires careful tuning of parameters, such as the number of trees, the depth of the trees, and the learning rate. This can be time-consuming, especially when the data set is large. However, there are automated methods for parameter tuning, such as grid search, random search, and bayesian optimization, which can help alleviate this issue.

Applications of Gradient Boosting

Gradient Boosting has a wide range of applications in various fields, some of its main use cases are as follows.

Use in Predictive Modeling

Gradient Boosting is commonly used in predictive modeling, where it is used to predict a target variable based on a set of features. It can be used for both regression and classification problems. For regression problems, it can predict a continuous target variable, while for classification problems, it can predict a categorical target variable.

Use in Ranking and Anomaly Detection

Gradient Boosting is also used in ranking, where it is used to rank items based on their relevance or importance. This is commonly used in search engines, where items are ranked based on their relevance to a query. Gradient Boosting can model the complex relationships between the features of an item and its relevance, making it a powerful tool for ranking.

Gradient Boosting is used in anomaly detection, detecting unusual patterns or behaviors. It can model normal behavior using a set of features and then detect anomalies by comparing the actual behavior with the modeled behavior. This makes it a powerful tool for anomaly detection.

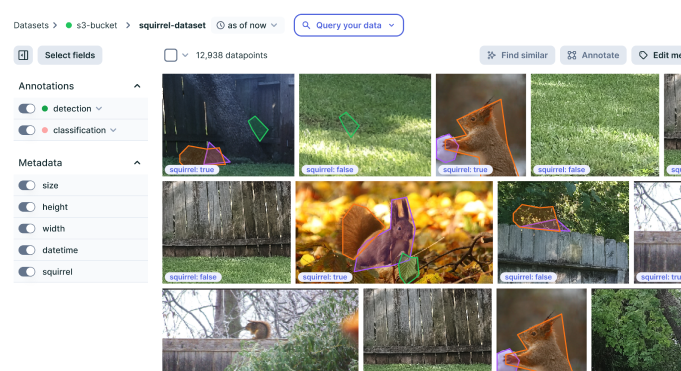

Improve your data

quality for better AI

Easily curate and annotate your vision, audio,

and document data with a single platform